Activation Functions

Gated Linear Unit

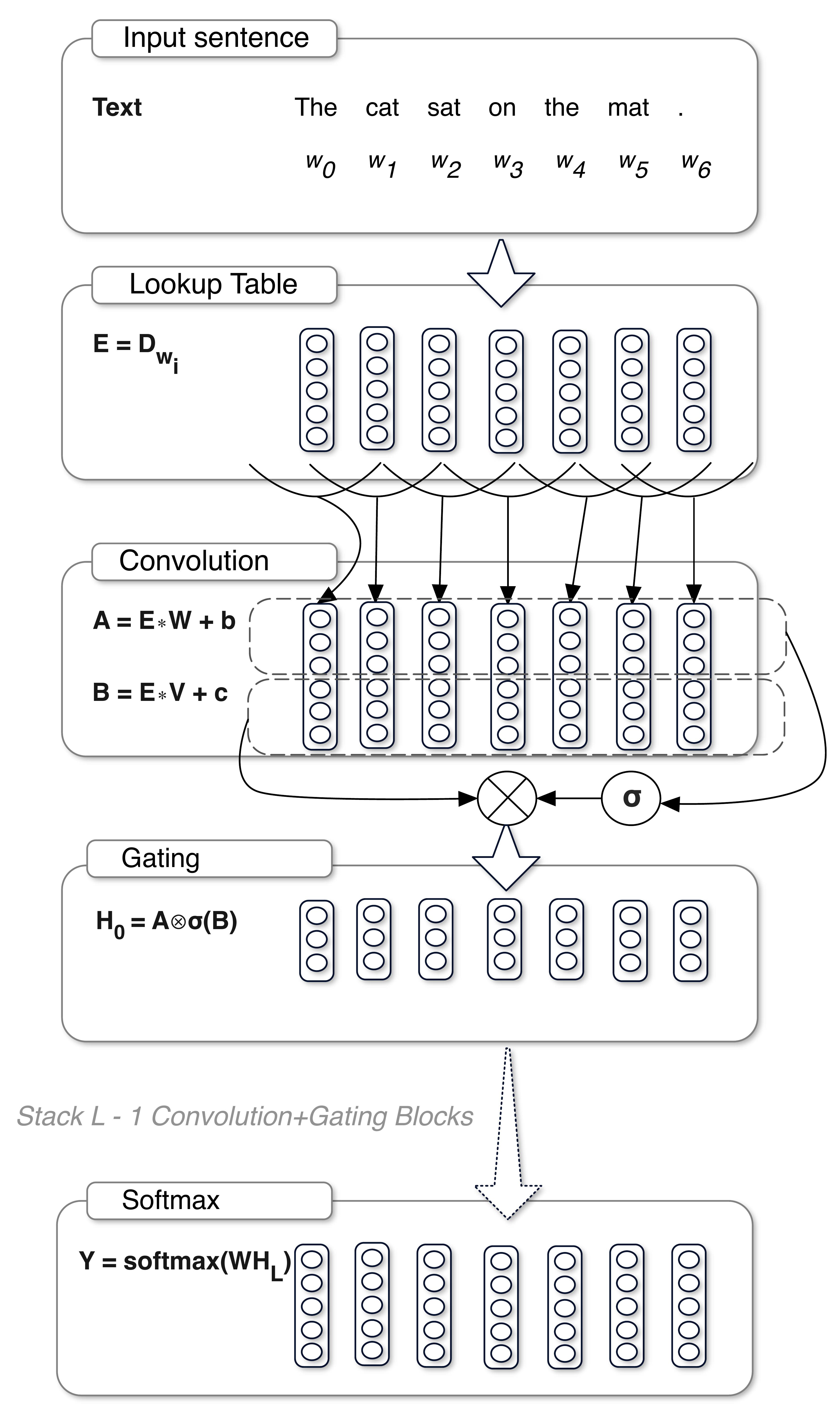

Introduced by Dauphin et al. in Language Modeling with Gated Convolutional Networks

A Gated Linear Unit, or GLU, computes:

$$ \text{GLU}\left(a, b\right) = a\otimes \sigma\left(b\right) $$

Here $\otimes$ denotes element-wise multiplication and $\sigma$ is the sigmoid function. The GLU is used in natural language processing architectures, for example the Gated CNN, where $b$ acts as the gate that controls what information from $a$ is passed up to the following layer. Intuitively, for a language modeling task, the gating mechanism allows selection of the words or features that are important for predicting the next word. The GLU is non-linear, but it keeps a linear path for the gradient through $a$, which mitigates the vanishing gradient problem.
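The formula can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names `sigmoid`, `glu`, and `glu_split` are chosen here for illustration, and splitting a single tensor into two halves to obtain $a$ and $b$ is an assumed convention, not something stated above.

```python
import numpy as np


def sigmoid(x):
    """Logistic sigmoid, applied element-wise."""
    return 1.0 / (1.0 + np.exp(-x))


def glu(a, b):
    """GLU(a, b) = a * sigmoid(b), where * is the element-wise product."""
    return a * sigmoid(b)


def glu_split(x, axis=-1):
    """Hypothetical helper: split x into halves (a, b) along `axis`, then gate.

    Assumes the size of `axis` is even.
    """
    a, b = np.split(x, 2, axis=axis)
    return glu(a, b)


# b = 0 leaves the gate half open, a large positive b opens it almost fully,
# and a large negative b closes it almost fully.
a = np.array([1.0, -2.0, 3.0])
b = np.array([0.0, 10.0, -10.0])
out = glu(a, b)
print(out)

# The derivative of the output with respect to a is just the gate value:
# a itself passes through no squashing non-linearity (the linear path).
grad_a = sigmoid(b)
print(grad_a)
```

With these inputs the first element is halved, the second passes through almost unchanged, and the third is suppressed to nearly zero, which is the selection behaviour described above.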

Source: Language Modeling with Gated Convolutional Networks

Tasks

| Task | Papers | Share |
|---|---|---|
| Language Modelling | 93 | 8.93% |
| Question Answering | 60 | 5.76% |
| Decoder | 49 | 4.70% |
| Sentence | 40 | 3.84% |
| Text Generation | 38 | 3.65% |
| Retrieval | 33 | 3.17% |
| Translation | 26 | 2.50% |
| Machine Translation | 22 | 2.11% |
| Natural Language Understanding | 21 | 2.02% |
