Regularization

Attention Dropout

Attention Dropout is a form of dropout used in attention-based architectures, in which elements of the softmax output in the attention equation are randomly dropped. For example, in scaled dot-product attention, elements are dropped from the first term, the softmax of the scaled query-key scores:

$$ {\text{Attention}}(Q, K, V) = \text{softmax}\left(\frac{QK^{T}}{\sqrt{d_k}}\right)V $$
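A minimal sketch of the idea in plain Python follows. It computes the softmax term, randomly zeroes its elements, and then multiplies by $V$. The function and parameter names (`attention_with_dropout`, `p`, `training`, `rng`) are hypothetical. Rescaling the surviving weights by $1/(1-p)$ ("inverted dropout") is an assumption borrowed from common dropout implementations, so that no rescaling is needed at inference time; it is not stated in the description above.

```python
import math
import random


def softmax(row):
    # Numerically stable softmax over one row of scores.
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    # Naive matrix product of two lists of lists.
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def attention_with_dropout(q, k, v, p=0.1, training=True, rng=None):
    """Scaled dot-product attention with dropout on the softmax weights.

    Hypothetical helper: p is the drop probability, and surviving weights
    are scaled by 1 / (1 - p) (inverted dropout, an assumption).
    Returns (output, attention_weights).
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    rng = rng or random.Random()
    d_k = len(k[0])
    # QK^T / sqrt(d_k)
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    # The first term of the attention equation: softmax of the scaled scores.
    weights = [softmax(row) for row in scores]
    if training and p > 0.0:
        keep = 1.0 - p
        # Drop each attention weight independently with probability p.
        weights = [[w / keep if rng.random() >= p else 0.0 for w in row]
                   for row in weights]
    return matmul(weights, v), weights
```

With `training=False` the function reduces to ordinary scaled dot-product attention, so each row of the returned weights sums to 1; in training mode some rows may no longer sum to 1, which is the intended effect of dropping attention weights.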

Tasks

| Task | Papers | Share |
|---|---|---|
| Retrieval | 84 | 9.68% |
| Language Modelling | 73 | 8.41% |
| Question Answering | 52 | 5.99% |
| Large Language Model | 42 | 4.84% |
| Sentence | 28 | 3.23% |
| Text Generation | 24 | 2.76% |
| In-Context Learning | 22 | 2.53% |
| Information Retrieval | 18 | 2.07% |
| Prompt Engineering | 16 | 1.84% |

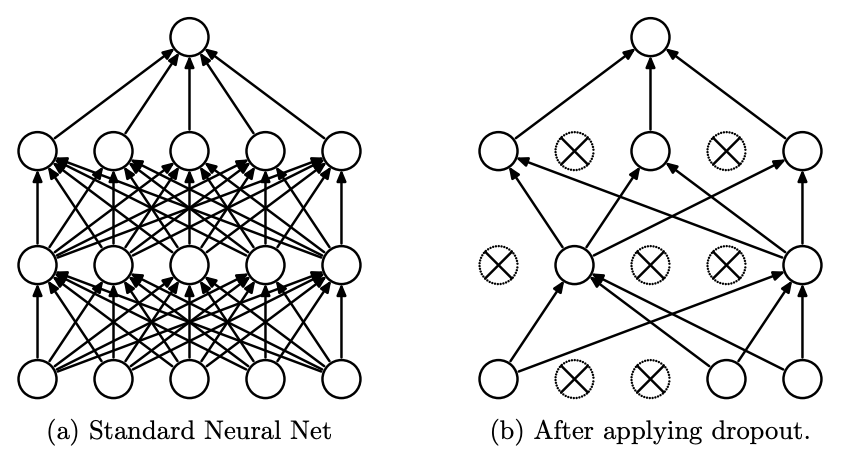

Dropout

Dropout